DQN (Deep Q-Network) 算法

最新更新于: 2024年6月18日上午10点14分

DQN (Deep Q-Network)

Algorithm 算法

DQN is based on Q-Learning, it changes the estimate of the Q function from “grid search” to “network search”. It’s an off-policy algorithm, which allow us to store past tracks in memory and replay them during model training. Based on optimal Bellman equation and TD method to update model, the optimal Bellman function is:

DQN基于Q-Learning算法,它将对Q函数估计,从“网格搜索”改为“网络搜索”。这是一种离轨算法,因此我们可以将过去的轨迹存储在记忆中,并在训练模型时进行回放。模型的更新基于最优Bellman方程和TD方法,最优Bellman方程如上所示。

Implementation 具体步骤

-greedy policy -贪心策略

We use -greedy policy as action policy to interact with the environment, and store the tracks in memory.

我们使用-贪心策略作为行动策略,用于和环境的交互,并将交互的轨迹记录在记忆当中。

Let the action space , the following is -greedy:

where is our Q value networks.

Memory 5-tuple 记忆五元组

Assume we get a 4-tuple from the environment , let terminal signal (if is terminal then , else ), combining this with 4-tuple give 5-tuple . Define for the memory set, store all the 5-tuple when action policy interact with the environment.

假设我们从环境交互中得到四元组 ,令终止信号为 (如果 终止,则 ,否则 ),将其和之前的四元组组合得到五元组 。令 为记忆集合,存储行动策略得到的所有五元组。

Model definition 模型定义

We define the state space is and action space is . The model is used to approximate Q-value (Action value) function, denote the model as , where is the weights of the model. Although the Q-value function is ,we use the a-th dimension of outputs to approximate it.

我们将动作空间记为 ,动作空间记为 ,模型将用于近似动作价值函数,记其为 ,其中 为模型的权重。虽然动作价值函数是 ,我们将用模型输出的第 个维度信息 去近似它。

Model training 模型训练

We would set a start_fit_size at /agents/constants/DQN.py, means to start training only when the number of samples in memory exceeds start_fit_size, and train after each step.

我们在 /agents/constants/DQN.py 中设置了 start_fit_size 变量,其表示只有当记忆中的样本数目超过该大小时才开始训练,并在每次执行完一步后训练。

Training process 训练流程:

- Random a batch of 5-tuple from memory .

- TD target: .

- Loss: where is TD error.

- Update: .

Environment test 环境测试

DDQN算法就是在DQN的基础上加上了目标网络,并在一段时间后将权重更新到目标网络上,DDQN的效果远优于DQN算法,KataRL中的DDQN的核心代码ddqn_jax.py,在不同环境和其他算法比较的测试结果,ddqn超参数调整性能比较。测试代码:

python katarl/run/dqn/ddqn.py --train --wandb-track

python katarl/run/dqn/ddqn.py --train --wandb-track --env-name Acrobot-v1Cartpole 平衡木

Cartpole environment information - Gymnasium

Hyper-parameters 超参数

Agent

-

model optimizer: Adam, learning rate

-

discount rate

-

-

memory size

-

start fit size

-

batch size

Environment

- positive reward

- negative reward

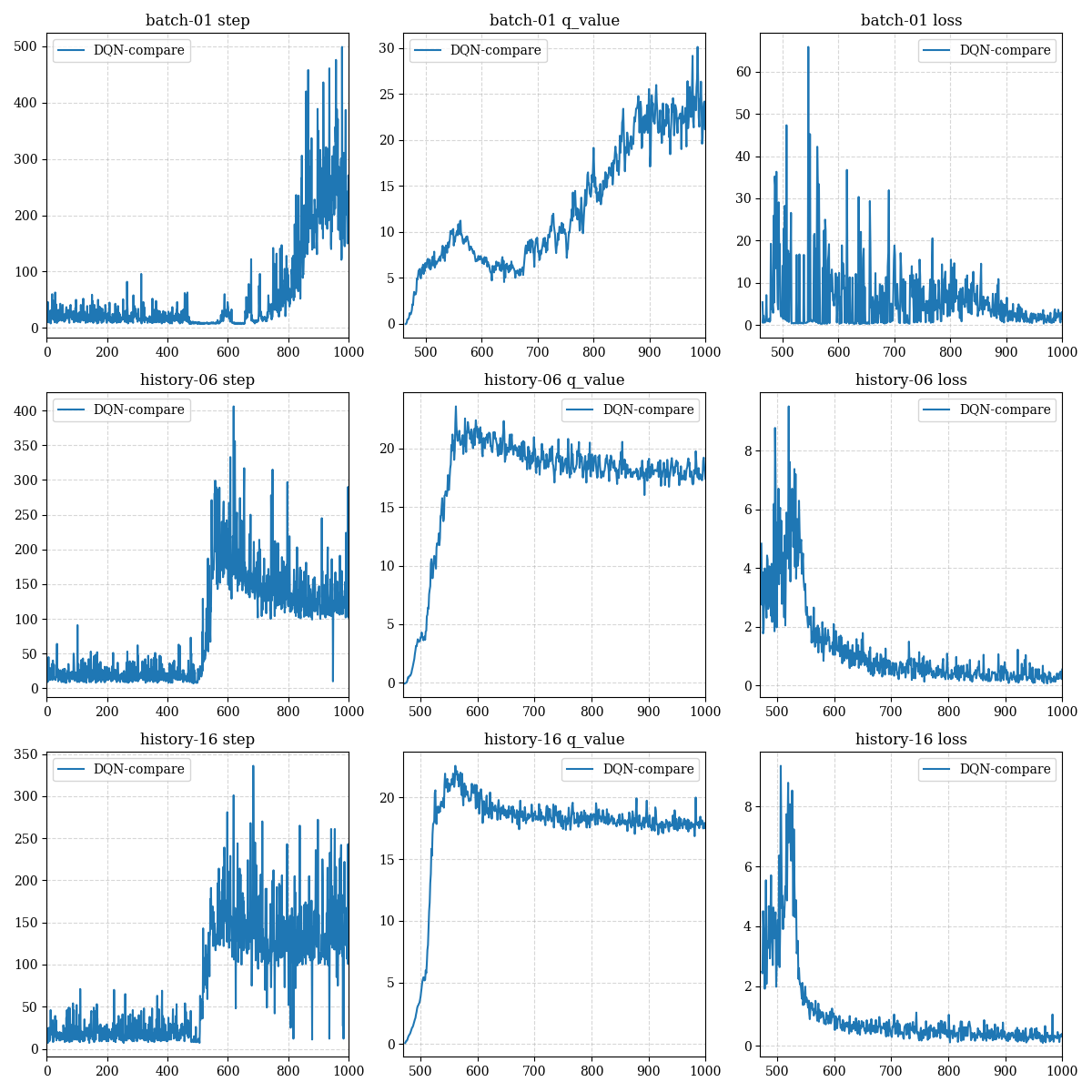

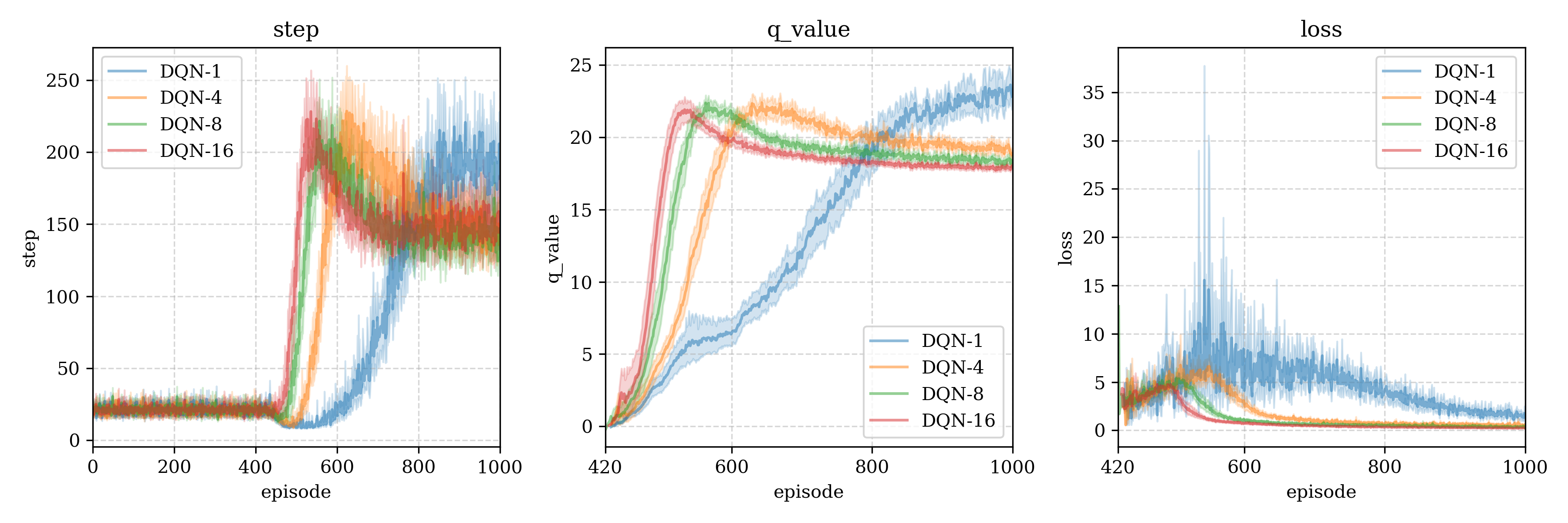

测试结果1(batch size to the best)

We test different batch size, interestingly, only small batch size can reach the maximum number of steps (500 steps):

我们尝试了不同的batch size大小,有趣的是,只有当小的batch大小才能达到最优步数(500步):

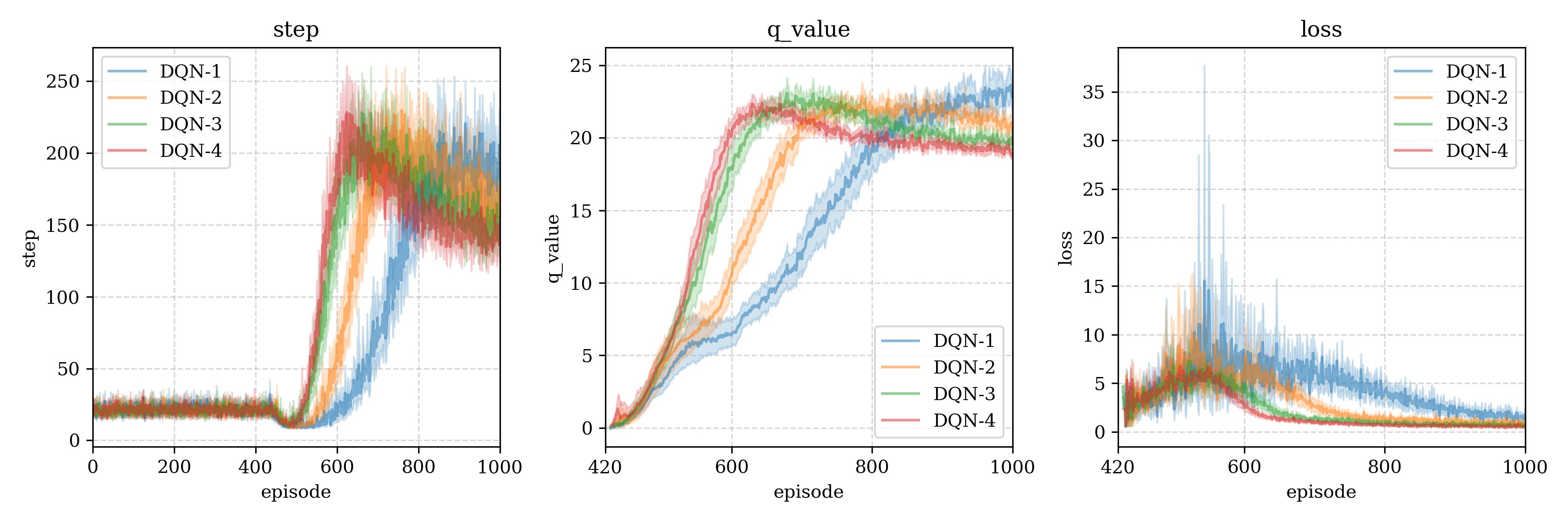

测试结果2 (batch size merge)

Following figures is average of multi-test with means and 95% confidence range (each test reset 30 times)

下图是多次重启得到的均值即95%置信区间(每一个重启30次):

DQN- means the batch size is ,DQN-i表示使用了batch size为i进行测试。

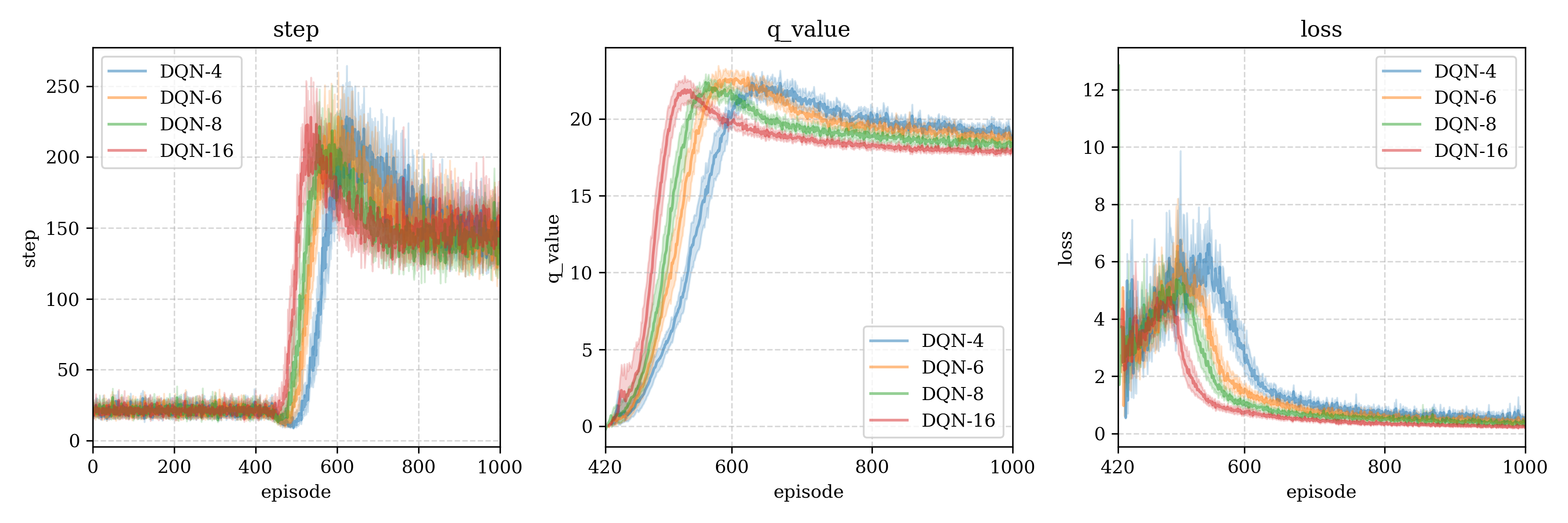

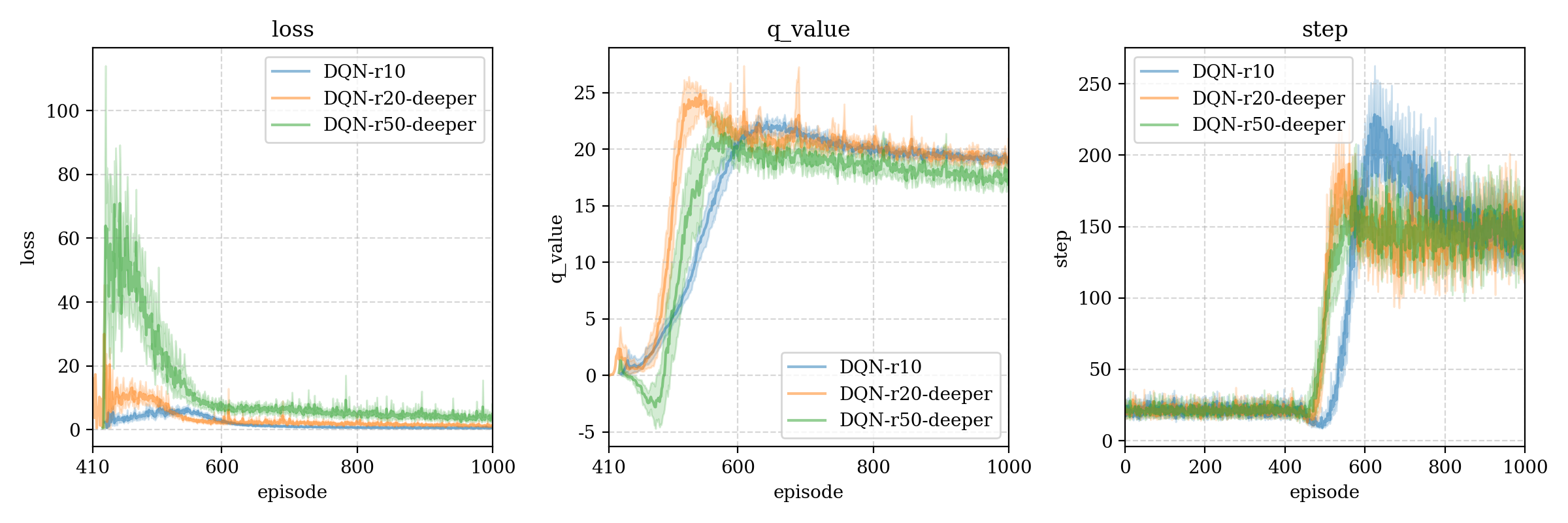

测试结果3 (model structure)

We build a deeper model: Input(4) - Dense(128) - Dense(64) - Dense(16) - Output(2), we compare this with the origin model, found that the deeper is also same or bad than origin model.

r10 > r20-deeper > r50-deeper

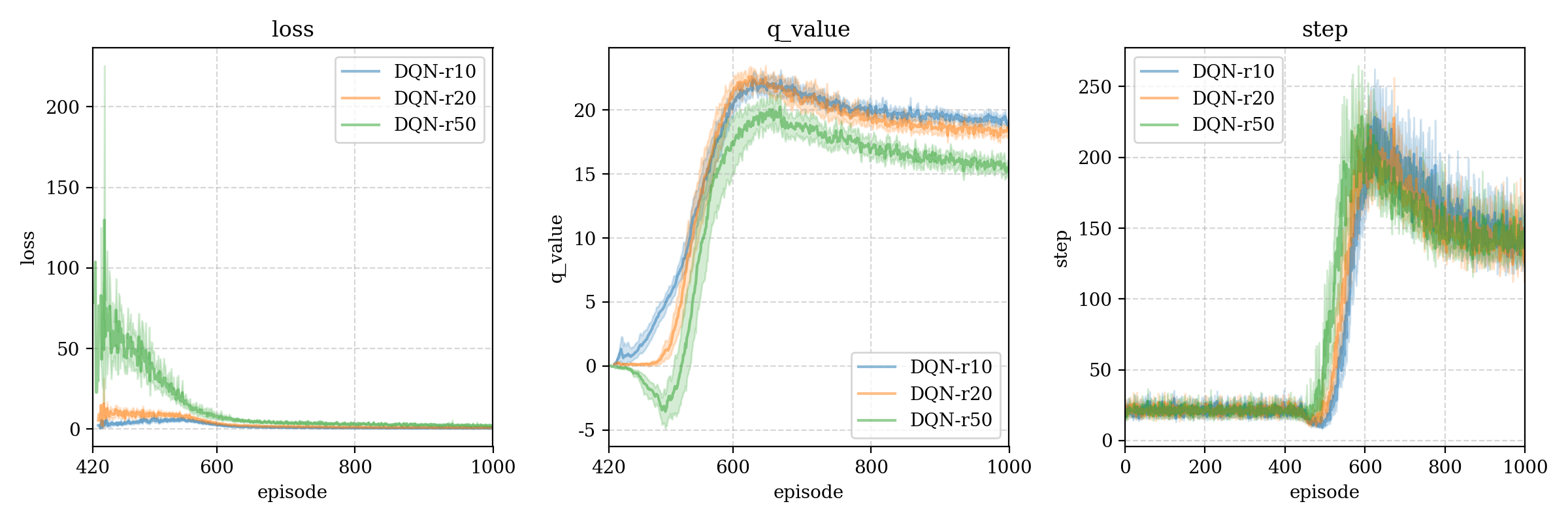

测试结果4 (negative reward)

We test different negative reward: -10, -20, -50, found there has little effect on step.

r50 > r10 > r20